UPDATE: We’ve secured some steadiness in the service. Time for some best practices for the masses.

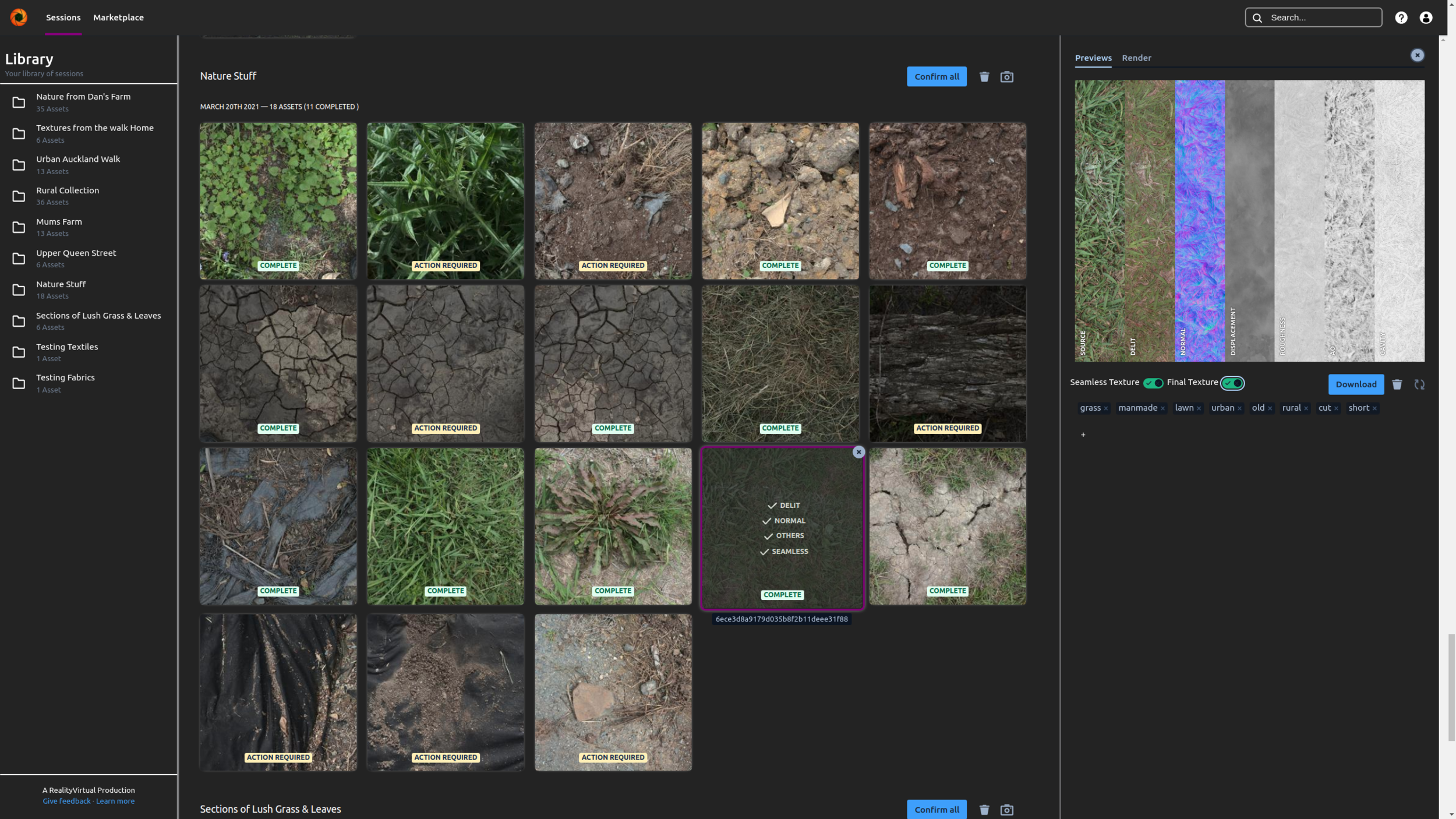

The main page of deepPBR. All your textures well organised and tagged.

What is deepPBR?

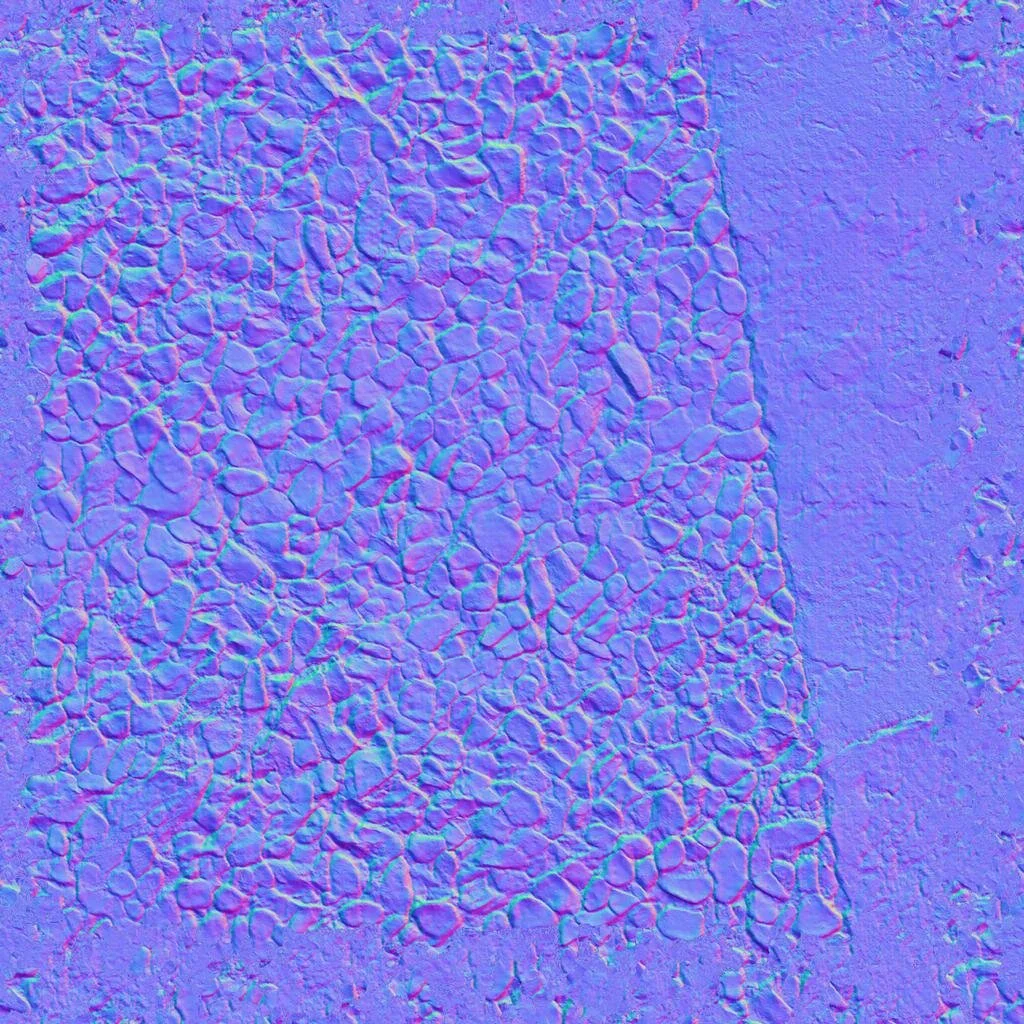

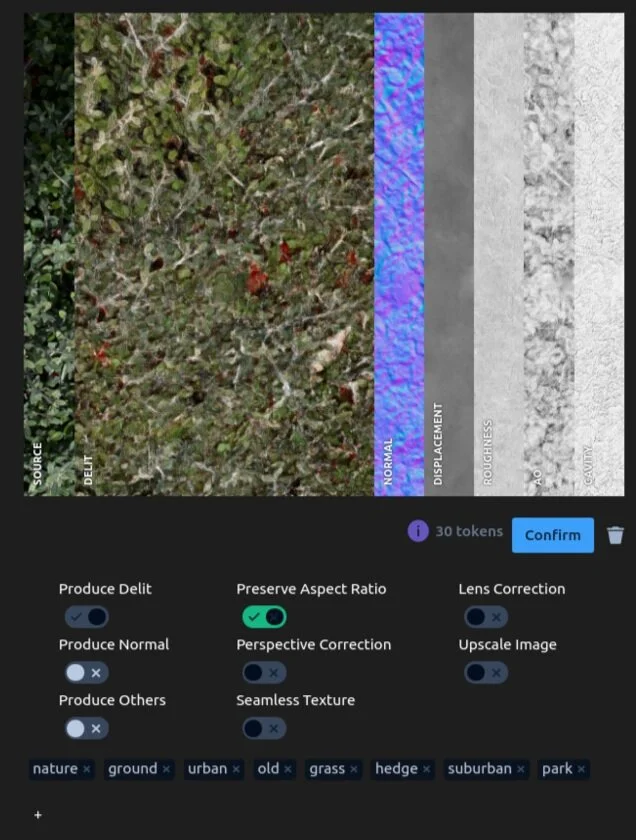

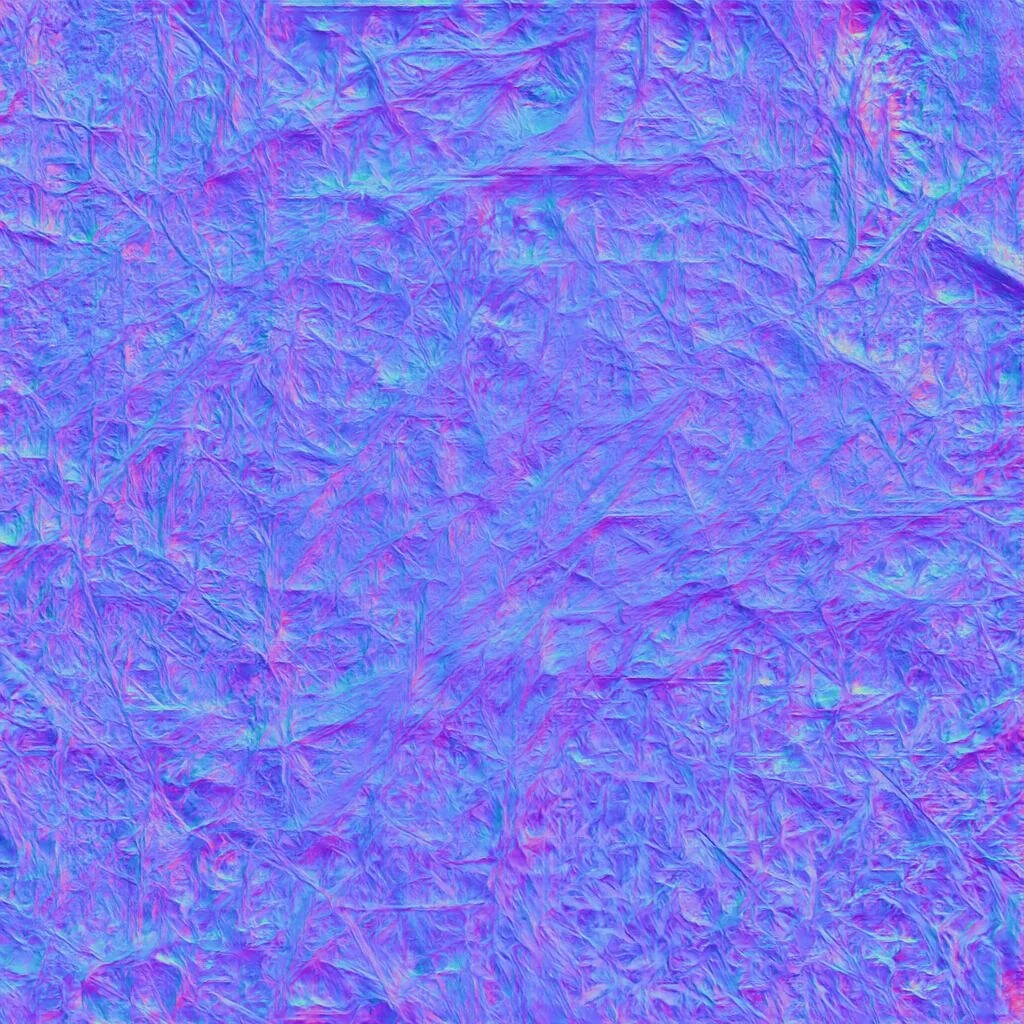

deepPBR (deep learning driven physically based rendering image extrapolation) is an intelligent image processing tool that aids in the creation of textures for use in the VFX industry. Our deep learning algorithms extrapolate all the required textures for a PBR workflow: albedo, roughness, normals, displacement, ambient occlusion and cavity. Most importantly, the results are contextually aware and consistent across the board. We are investigating metallic and possibly translucency in the near future too. With deepPBR, an individual armed with nothing more than a reference image, smartphone or DSLR camera can now create all the varying textures required for modern game engines and 3D packages from a single photograph. Essentially, this allows an individual to create a vast and original texture library in days, an undertaking that would take a team of texture artists months.

All your PBR maps provided in a matter of seconds. Full resolution 4k textures in a minute.

What is deepPBR’s current stage of development?

We are currently entering early beta with regard to our models, API and frontend. As they say, results may vary. Nonetheless, we have major model revisions coming very soon, in addition to fine-tuning many of our processes. You can expect to encounter UI / stability issues as we tackle infrastructure and feature creep. Because of the hassles that our early members will experience, we are introducing what we refer to as a ‘founders edition’ subscription for the first thousand members. This subscription will offer generous perks for the life of the membership, all for tolerating early stage beta. We can’t stress enough how important this support is to us, as this number allows us to sustain development operations indefinitely.

What perks are we looking at here?

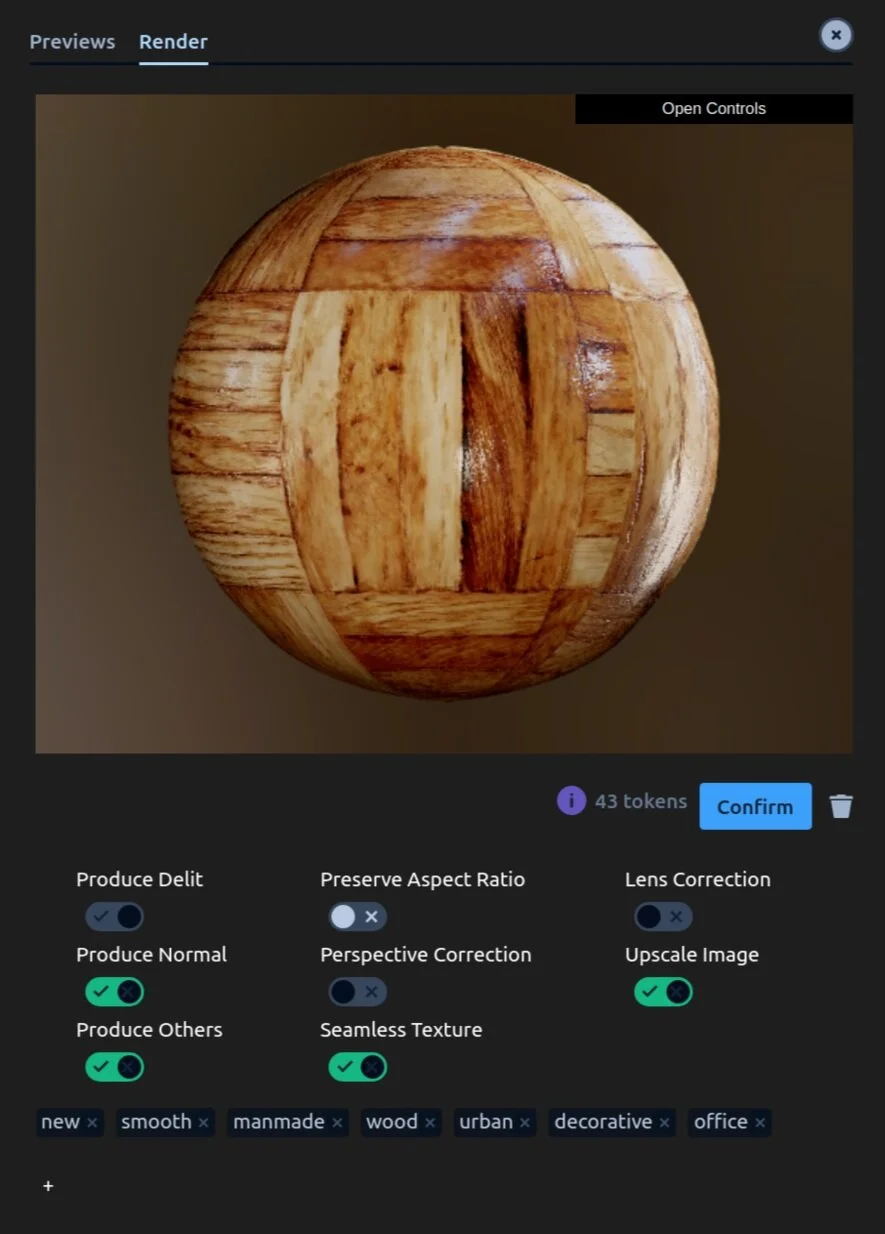

Full post-processing is catered for. Changes made here will be reflected in the final render very soon.

Well, as you continue to read this FAQ, you’ll note that we talk about many new features coming up. Just note that as a ‘founders edition’ subscriber, you’ll get early access to these features. We will also be providing very generous data hosting, so plenty of cloud storage. You will also have the ability to reprocess your assets as new algorithms come in. All ‘founder’ members will also get a lovely badge of honour on their account when sharing their content on Marketplace. Continue reading, there’s a lot of very cool things coming, just know that you’ll be first to get them :)

So how much is a subscription?

At the moment we only have two tiers. One is free (which currently only allows viewing of previews) and the other is ‘founders’, which is $10 USD a month. That gives you approx. 500 GB of storage and the ability to process around 40 4K textures, so 25c for a 4K texture. Quite the bargain. Once we improve efficiencies in our code we’ll be revising pricing and introducing bigger subscriptions for power users, along with the ability to pay as you go. ‘Founders’ will, however, retain the full feature set for life.

How soon do you foresee a more stable product?

We plan for most of the kinks to be ironed out approximately a month after initial launch: 26th March 2021. There are so many user experience and performance components we plan to improve over the next few weeks. Metadata, multi-asset select, saving of presets to allow batch operation and a far more streamlined interface will all be well under way, along with too many others to mention at this time. This is really just the beginning.

What would you say are the weaknesses of deepPBR?

You can choose to use only Delit and enable Preserve Aspect Ratio. This is useful for hot-swapping images when delighting photogrammetry. We will streamline this function very soon for bulk image processing.

We are a small team and have had rather limited resources to date. We have trained mostly on rural / urban photogrammetry datasets. We lack any real ArchVis data, both for our normals and for delighting. We really need to scale up our acquisition processes. This will happen with your support, and we will increase the diversity of scenes based on request. It does not take much data for us to support many varied source photographs or reference images. Simply put, we can scan a dozen different kinds of textiles and the system can generalise that knowledge to a much more diverse set. Also, our Displacement maps are a hot mess right now, though that will be resolved in a few weeks.

What additional features can we expect to see in the coming months?

We have an array of features coming out in addition to upgrades to our models. Our deep learning is improving over time, and user feedback and human reinforcement learning contribute to an ever-improving service. All updates on what is up and coming will be posted on our Discord.

Come on, give us a hint??

AI based Perspective Correction is a big one. This will be the most intelligent that we know of and will take into account full homography matrix correction, including translation on top of perspective, for nice, easy, seamlessly tiling textures. We will also be releasing an in-painting based seamless texture methodology, though this will be some months away. We are also considering breaking away from pure PBR processes and introducing a component we refer to as deepFX. Basically, we will be introducing a vast array of AI based image manipulation processes: Super-sampling, Chromatic Aberration Removal, Intelligent Archival Repair, Black & White to Colour, the list goes on. Seeing as we have the infrastructure in place, it really will only take us a few weeks per feature to introduce new GANs as we source the training data.
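For anyone wondering what a homography matrix actually does, here is a minimal Python sketch. This is not deepPBR’s implementation, just the textbook idea: a 3x3 matrix maps a 2D point through homogeneous coordinates, and the same matrix can carry translation and perspective together. The function name `apply_homography` and both example matrices are hypothetical, chosen purely for illustration.

```python
from typing import List, Tuple

Matrix3 = List[List[float]]
Point = Tuple[float, float]


def apply_homography(h: Matrix3, point: Point) -> Point:
    """Map a 2D point through a 3x3 homography (hypothetical helper)."""
    x, y = point
    # Multiply the matrix by the homogeneous point (x, y, 1).
    xh = h[0][0] * x + h[0][1] * y + h[0][2]
    yh = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    # The divide by w is what produces the perspective effect.
    return (xh / w, yh / w)


# Illustrative matrices only (assumed values, not from deepPBR).
# Pure translation: shift by (+5, -2).
translation: Matrix3 = [[1.0, 0.0, 5.0], [0.0, 1.0, -2.0], [0.0, 0.0, 1.0]]
# Perspective term in the bottom row: points further along x shrink.
perspective: Matrix3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.5, 0.0, 1.0]]

print(apply_homography(translation, (1.0, 1.0)))  # (6.0, -1.0)
print(apply_homography(perspective, (2.0, 4.0)))  # (1.0, 2.0)
```

Correcting a photograph amounts to finding the matrix that undoes the camera’s perspective and resampling every pixel through it. Estimating that matrix is the hard part, and that part is not shown here.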

Outside of texture creation, what other immediate applications does deepPBR have?

Well, as someone who deals with photogrammetry, I can say we have introduced the ability to essentially remove lighting from your photographs. This would be best implemented as an API for products like Reality Capture, though for now you can simply brute force every photograph and hot-swap when baking your lighting maps. We can also provide quite accurate roughness maps from UV textures. Once integrated into 3rd party packages, the ability to create delit and roughness maps will be a huge win for photogrammetry.

Why did you choose to have deepPBR as a cloud service?

Though we have spent little time on ArchVis, our system does know what floor boards are and what properties they should have, all from a very limited dataset at this point.

We will be offering major studios a black-box solution down the line, though for now we really do see many benefits to running in the cloud. Primarily, as a photographer myself who travels a lot (not so much recently), having all my assets in one place and being able to use any computer terminal to do extremely expensive computational tasks just makes sense. Also, AWS did invest a large amount of resources earlier in this project, so we’re paying it back as a big thank you.

Will deepPBR be introducing an API?

Yes. Our first use case for this API will be an iPhone app for deepPBR. We will also be allowing 3rd parties access to our AI solutions. We are very interested in making our delighting tool available to the photogrammetry community from within popular photogrammetry applications. Looking at you, our good friends at Reality Capture.

How does deepPBR improve with user feedback?

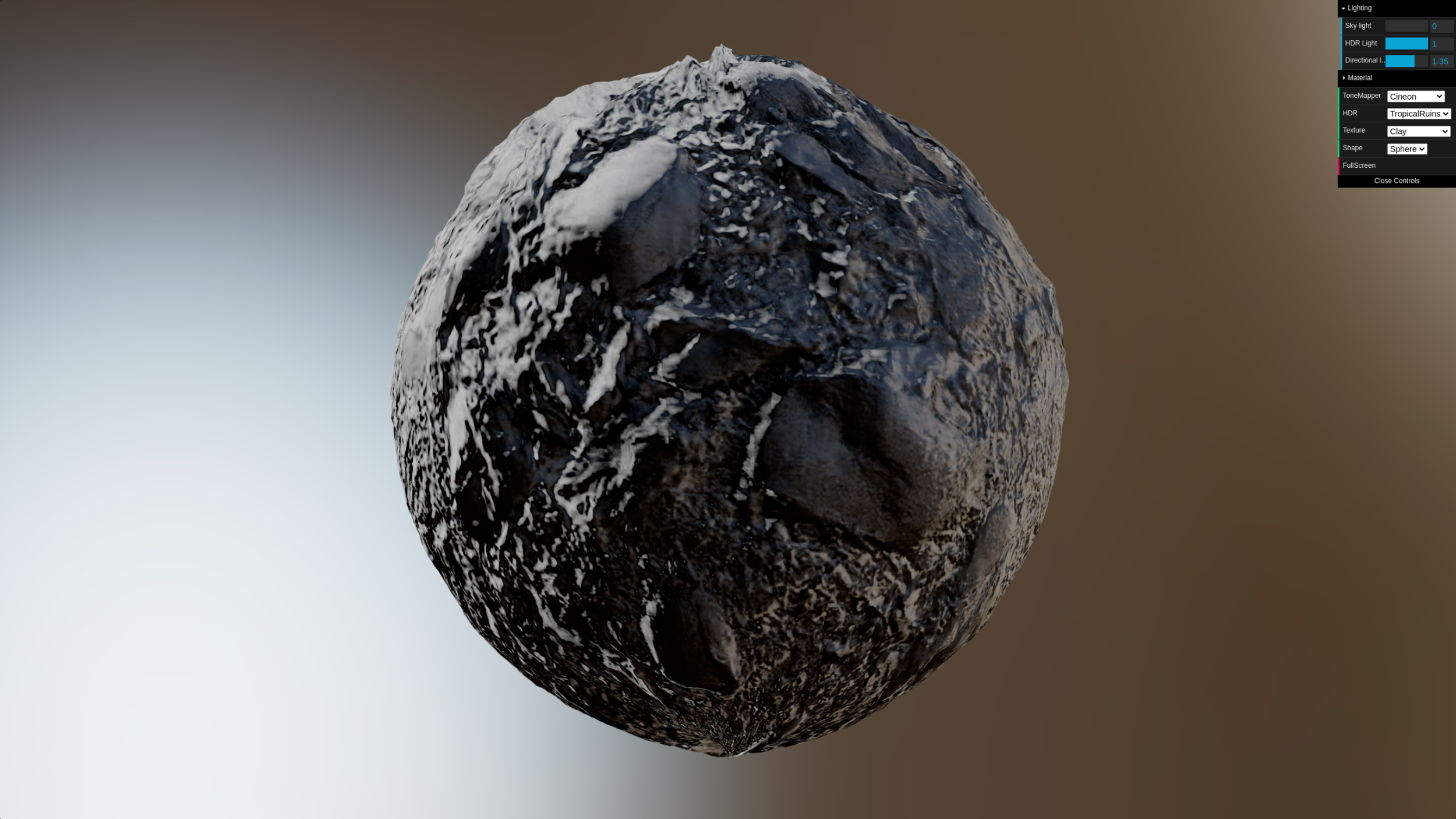

You can view your results with many HDRi environments and most common shader viewing modes. Keep in mind that preview results are only at 1024x1024, so once rendered, quality is much higher.

Our models are trained on data that we have acquired over the years. There will always be cases where our GANs have not seen a particular style of object / scene. We encourage users to give us feedback based on fail-case scenarios. We will be implementing this directly into the user-interface over the next few weeks so you can state right there and then if a certain image does not give the desired result. We will also be introducing refunding of tokens if you choose not to download your image after reviewing the final render. This then feeds back into our analytics. We can then isolate if we in fact need to train more on that use-case.

What other methods are planned for human reinforcement learning?

When you submit an image and enter the preview parameters section for tweaking Displacement, Roughness and the rest, all these subtle tweaks will feed back into our analytics so that when other users send similar looking images, suggestions will be offered over time. This will be some months away, as we really do need to gather a large pool of data before training this style of CNN. We do think this will be a huge feature. Basically, intelligent suggestions based on thousands of users’ feedback.

The AI is really quite intelligent. Both for tagging and understanding what material maps you are aiming for.

What are the tags used for?

Tags are used for search. They are an easy way for you to search your database. However, if the tags are incorrect, we encourage you to suggest your own. This is very similar to the human reinforcement learning for tweaking an image: when other people submit similar images, the tagging system will get better over time. We’ll be retraining this model constantly to improve image classification results. As it currently stands, it needs work.

What is Marketplace and when will it be available?

All members will have the ability to opt into Marketplace in the very near future. If you like your textures and would love for others to have access to them, this will be an option. We will also be using the “tags” that you and the AI suggest to allow other people to easily find what they are looking for. Marketplace textures will be expected to be curated by our members and will be subject to community review and policing. So no nude pics.

What do I gain from contributing to Marketplace?

Members will be able to purchase Marketplace textures from other members. The members submitting these textures will get the lion’s share of the revenue, while deepPBR will take a commission for hosting / additional processing. We are still working out our exact pricing model. We simply need to work out our AWS math before we can announce exact pricing. It is fair to say, however, that the pricing will be very competitive with other services. We also think this is a great way for texture artists to actually get paid. As a musician myself, I know a thing or two about not getting paid royalties for airtime.

Will Marketplace expand?

Yes. Just as we are creating a marketplace for PBR textures, we’ll also be expanding it to photography in general. We see our future service being similar to Shutterstock, though with completely community driven content where the photographer gains the vast majority of the revenue. We also plan to expand the service to include entire photogrammetry environments too, though this is a little ahead of the game. It is a concept we refer to as actualreality.io. I discuss all of this in my TEDx talk from late 2019, so this has been on the cards for quite some time.